SEO Audits: What You Need to Know First

An SEO audit is the first step in developing a measurable strategy. During the audit you’ll assess how well your site follows SEO best practices and uncover issues affecting performance, such as: on-page elements; content quality; page load speed; internal links, external links, and backlinks; and technical barriers to crawling and indexing. You’ll then turn the insights you’ve gathered into actionable items. Performing regular SEO audits is crucial to the longevity and success of your website.

This audit checklist won’t define the elements of an SEO audit or explain why each one matters, because we already covered that in another post. If you’re new to this, read that post first so you understand what these elements are.

Suggested Tools

To complete a comprehensive review of your site, you’ll need some tools. The good news is some of these tools are free, but the bad news is some aren’t. And some are free only up to a certain site size.

Free Tools:

- Google Search Console, Google Analytics (GA4);

- Screaming Frog (up to 500 URLs per crawl);

- Ahrefs (some features are free, albeit limited)

Paid Tools:

- Ahrefs – I’ve said this in another post: I think it’s the best bang for the buck when it comes to SEO tools

- Other SEO Tools: Semrush, Moz, and the even more budget-friendly Ubersuggest

- Each tool has its pros and cons, but typically the cheaper the tool, the fewer features it has and the lower the amount and/or quality of its data

Example of Ahrefs Backend

Table of Contents

Chapter One: Crawl Your Site Like a Search Engine Bot

Chapter Two: Site Speed & Core Web Vitals

Chapter Three: Mobile Friendliness & UX Audit

Chapter Four: On-Page SEO & Content Optimization

Chapter Five: Advanced Technical SEO Audit

Chapter Six: Off-Page SEO & Backlink Profile Analysis

Chapter Seven: Local SEO Audit (If Applicable)

Chapter Eight: Competitive Analysis & Benchmarking

Chapter Nine: Aligning SEO with Other Digital Strategies

Chapter Ten: Common SEO Issues You’ll Find (Cliff’s Notes)

DRVN Media – SEO Audit Checklist

This is an actual, no BS step-by-step guide on exactly how to do an SEO audit in 2025. This is the same checklist I follow that has helped me gain more keywords, and increase organic traffic on DRVN’s client sites.

*Blue arrow was a couple weeks after I started implementing optimizations

It doesn’t matter if you’re working on your own site, or you’re an SEO professional looking for a guide to follow – this checklist is for you. If you’re ready to improve your Google rankings, then buckle up, get comfortable, and enjoy the ride because this won’t be a short one.

Step 1: How to Crawl Your Site Like a Search Engine Bot

Crawl Your Site Using the Tool of Your Choice

I’m often asked why I like using a crawler such as Screaming Frog when I also subscribe to Ahrefs. And I’m going to be 100% honest with you, it’s because I learned to use Screaming Frog before I ever used the Ahrefs platform.

How you crawl your site the way Google and other search engine bots do will depend on the tool you’ve chosen. Here are getting started guides from Screaming Frog & Ahrefs. I’m only listing those two because I use them regularly, which is why I endorse them, and a list of every tool that can crawl a site would be far too long. If you need to find out how to do it in your tool, simply Google “[SEO Tool Name] + how to crawl a site” and the first result should be a guide. If you don’t find a guide, then it’s likely the tool doesn’t have the feature, or the company didn’t bother to create the guide and/or optimize its own site.

Evaluate Your Crawl

I use Screaming Frog, and I export the crawl filtered for just the HTML pages as an Excel (or Google Sheets) spreadsheet. Then look at the following items:

- Title Tags – I create a tab for titles over 599 pixels (too long, about 55 characters; if you’re optimizing with a bias towards mobile search you actually get 65-70 characters before truncation), a tab for titles under 200 pixels (too short, about 20 characters), and a tab for missing titles.

- Title Tags Too Long: I do my best to make sure the visible part of my title tag contains the most important information that will get a user to click. However, I don’t fear truncated titles (aka “the ellipsis”), and if something is important enough for Google to understand the context of my page, I include it. This isn’t the same as stuffing keywords. If you write a title tag that truncates, you need to periodically audit it to see if Google is changing it to something else in the SERP. Oftentimes it’s the H1. If it’s not the H1, then it’s another header on the page.

- Title Tags Too Short: I do my best to use the space given to me before truncation too. While being concise is important, I find that if your title tag is too short, Google will more often replace it with a header element. The infuriating thing is, sometimes the header is even shorter than your title tag but it contains the keyword of the user’s search.

- Title Tags Missing: I don’t think I need to explain this one, but just in case I will. The title tag is one of the easiest things to optimize that directly signals Google how you want your page to rank. In fact, I tested this on a site where I intentionally optimized the title tag to be something the page wasn’t – and Google still gave me the rankings. Despite high bounce rates, I still got page 1 rankings for the terms I used – crazy, right?! *How did I get this practice site? I have a client who had virtually no organic traffic when we started, and I don’t charge him much for my services. In exchange, he lets me run some testing on his site. Tread softly and have an ironclad contract when setting up a relationship like this.

- Meta Descriptions: I create a tab for too long (over 920 pixels, over 155 characters), too short (under 400 pixels, under 70 characters), and missing descriptions.

- I won’t go into too much depth here because meta descriptions don’t send direct ranking signals (probably a result of too many SEOs spamming keywords in this field in the early 2000s), and Google often (as much as 70% to 80% of the time depending on the search query type) overwrites your suggested meta description with on page text. (Hint, lots of the time it’s a short copy section if the page has it.)

- But don’t underestimate the power of a good meta description that entices users to click through. If you’re optimizing with a bias towards mobile search, just remember that meta descriptions truncate sooner on mobile than on desktop (at about 125 characters).

- Between title tags and meta descriptions, think of title tags as Batman and meta descriptions as Alfred: not the lead like Batman, or even Robin, but still an important part of the story.

Example Title Tag & Description in Google SERP
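If you’d rather not build those tabs by hand, the sorting can be scripted. Here’s a minimal sketch, assuming your crawl export has been loaded into a list of rows with “url” and “title” fields (hypothetical names, not Screaming Frog’s actual column headers). It uses the approximate character counts from above as a stand-in for pixel widths, since measuring pixels requires font metrics.

```python
# Character thresholds are the approximations from this post, not exact pixel limits.
TITLE_LIMITS = (20, 55)    # (too short below, too long above)
META_LIMITS = (70, 155)

def bucket(text, limits):
    """Return 'missing', 'too short', 'too long', or 'ok' for one field."""
    shortest, longest = limits
    cleaned = (text or "").strip()
    if not cleaned:
        return "missing"
    if len(cleaned) < shortest:
        return "too short"
    if len(cleaned) > longest:
        return "too long"
    return "ok"

def bucket_pages(rows, field="title", limits=TITLE_LIMITS):
    """Group URLs by bucket. Each row is a dict with 'url' and the chosen field."""
    groups = {"missing": [], "too short": [], "too long": [], "ok": []}
    for row in rows:
        groups[bucket(row.get(field), limits)].append(row["url"])
    return groups
```

Calling bucket_pages(rows, field="description", limits=META_LIMITS) would do the same sorting for meta descriptions, assuming your rows carry a “description” field.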

- Broken Links (404): These are URLs discovered on your site that result in a 404. Maybe you copied and pasted it wrong. Maybe you deleted a page without redirecting it. Maybe you did something else that created it. Whatever the reason, 404 links result in a bad user experience and are bad for your site’s SEO efforts.

- If you can, update these links to the correct URL, and 301 redirect the broken link to the correct one, because you never know who has shared the broken one, or where.

- If you can’t determine what the appropriate URL should be, or where the link is on the page, then at a minimum redirect the user to the page you think is closest. Sometimes this happens and there’s nothing you can do about it. For example, I’ve had clients divest from a product or category where no other product was exactly like it, only kind of like it.

- Duplicate Content/Pages: Duplicate content can confuse search engines, making it difficult to determine which version of a page to index and rank. This can dilute SEO value, split ranking signals, and even cause important pages to be left out of the party on SERPs. Here are things I look for:

- URL variants such as query strings, AMP (accelerated mobile pages – note Google doesn’t use this as a ranking factor anymore), print friendly pages (I do come across these from time to time), and anything else that might create an unintentional duplicate page.

- This is where you’ll need to put on your thinking cap to decide if you need to implement a canonical tag to signal which is the preferred version of a page you want to rank, use a 301 redirect where necessary, or take some other route such as noindex tags or blocking those URLs in robots.txt. Unfortunately, I can’t give you a definitive answer for all scenarios because “it depends” and each case is different.
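To spot URL variants in a crawl export, one option is to normalize each URL and group the ones that collapse to the same address. The sketch below is an illustration only: the IGNORED_PARAMS set is a hypothetical list of parameters that don’t change page content, and you’d need to adjust it to your own site.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list: parameters assumed not to change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "print"}

def normalize(url):
    """Lowercase the host, drop ignored parameters, fragments, and trailing slashes."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key.lower() not in IGNORED_PARAMS]
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path,
                       urlencode(sorted(kept)), ""))

def find_variants(urls):
    """Return only the normalized URLs that more than one crawled URL maps to."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each group it returns is a candidate for a canonical tag, a redirect, or one of the other routes above. Which one still depends on the case.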

- Check for Proper Indexing: While the site crawl is running in the background, I like to look at the robots.txt (whateveryoursiteis.com/robots.txt) and the XML sitemap, and once the crawl is done, the noindex and canonical tags. If these things aren’t done right, it can be a huge hindrance to search engines crawling your site and ranking your pages effectively.

- Robots.txt: Ensure it isn’t accidentally blocking important pages and/or sections of your site from being crawled. A recent example: before this business was a client, they had their site redesigned. Six months post launch they were wondering why they weren’t getting any organic traffic. The good thing: they hired me. The bad thing: they fired their dev company, because it had pushed the robots.txt from the dev site to the live site during launch. The robots.txt did what it was supposed to do for the dev site, which was block search engines from crawling and indexing it. However, “Disallow: /” as an instruction on your production site means the same thing.

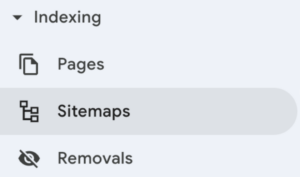
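A quick way to catch a “Disallow: /” before it costs you six months of traffic is to test your most important URLs against the robots.txt rules. Here’s a minimal sketch using Python’s built-in urllib.robotparser. The function name and example URLs are my own placeholders, and note that this parser doesn’t interpret wildcard patterns the way Googlebot does, so treat it as a first check rather than the final word.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# A dev-site robots.txt accidentally pushed to production blocks everything.
dev_robots = "User-agent: *\nDisallow: /\n"
important = ["https://example.com/", "https://example.com/products"]
print(blocked_urls(dev_robots, important))
```

If that list isn’t empty for pages you want ranked, you’ve found your problem.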

- Sitemap.xml: The majority of the time your CMS (or a plugin like Yoast for WordPress) will dynamically create the XML sitemap for you. However, I’ve audited sites where this wasn’t happening. It’s always my recommendation to have the sitemap.xml dynamically update as pages are added and removed from your site. However, if you need to manually create one, then you should update this each time you add or remove a page from your site. Luckily, creating an XML sitemap is built into Screaming Frog. The address of your sitemap should be listed on your robots.txt, and submitted to Google Search Console (screenshot below on where to find that in GSC).
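If you do end up creating a sitemap manually and don’t want to use a crawler for it, the file itself is simple. This is a bare-bones sketch that builds one from a list of URLs using only the required loc element of the sitemaps.org format. The build_sitemap name is hypothetical, and optional fields like lastmod are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return sitemap XML as a string, with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped on output
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

You’d save the returned string as sitemap.xml at the root of your site and regenerate it whenever pages are added or removed.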

- Canonical Tags: Once the crawl is complete, I like to review the canonical tags. This is to ensure they are implemented correctly and consolidate rankings for similar or identical pages to the primary URL. There are times you’ll come across canonicals that were implemented by those before you, so you might have to look at historical data to understand the logic.

- My best example was a major clothing client I had years ago at an agency before DRVN. They had 2 category product listing pages: 1 for all denim products, and 1 for all jeans. Originally, there was a canonical that pointed the page for jeans to denim. However, prior to becoming an agency client, they redesigned the denim page to be a content landing page that talked about the premium quality of their denim, their denim product lines, and links to the various PLPs. As a result I removed the canonical because the two pages no longer competed over the same search intent; one was a transactional page (jeans), and the other became an informational page (denim).

- Noindex Tags: A noindex tag tells Google, and other search engines that you want the page to be crawled, but you don’t want it to appear in search results. Sometimes this is intentional, but sometimes this is unintentional. It’s important for you to understand why a page is tagged noindex to determine if it was intentional, or unintentional.
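For a spot check on a single page, you can pull the canonical and noindex signals straight out of the HTML. Here’s a minimal sketch using Python’s built-in html.parser. It only reads the link rel="canonical" and meta name="robots" tags, so it’s an assumption-laden shortcut: it won’t see directives sent in HTTP headers or tags injected by JavaScript.

```python
from html.parser import HTMLParser

class IndexingTags(HTMLParser):
    """Collects the canonical URL and any noindex directive from a page's tags."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and (attributes.get("rel") or "").lower() == "canonical":
            self.canonical = attributes.get("href")
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = (attributes.get("content") or "").lower()
            self.noindex = self.noindex or "noindex" in content

def indexing_tags(html):
    parser = IndexingTags()
    parser.feed(html)
    parser.close()
    return {"canonical": parser.canonical, "noindex": parser.noindex}
```

A page that reports noindex as True, or a canonical pointing somewhere you didn’t expect, is one to investigate before assuming it was intentional.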

Step 2: How to Analyze Site Speed & Core Web Vitals

Page speed and Core Web Vitals (CWV) are regularly mentioned in the same breath because they go hand-in-hand. The difference between the two is that “page speed” is the actual time it takes for a webpage to load, and CWV are the specific metrics that measure aspects of the user experience, such as how quickly content becomes visible and how stable the layout is when loading.

As confirmed by John Mueller (Google Search Advocate), both are ranking factors and play a role in how your site ranks. When this was announced in 2020, SEOs freaked out about it, forgetting it’s 1 ranking signal out of thousands. If website A is faster than website B, but B is more relevant to the user’s search query, then website B would still outrank A. That doesn’t diminish its importance, because websites moving from “failing or needs improvement” to “good” may see ranking improvement. The takeaway: it’s the degree of the improvement that matters. If it’s a B+ to A- you shouldn’t expect any sort of miraculous changes to your rankings. Now this is the frustrating part, because improvements to page speed and CWV might require some assistance from a developer.

Do You Need a Developer to Improve Core Web Vitals (CWV)

There are several non-technical optimizations website owners, content managers, and/or marketers can implement without coding. Here are the ones most of you should be able to do on your own:

- Optimizing Images (LCP)

- Use next-gen formats like WebP instead of PNG/JPEG

- Keep image file sizes under 1MB at most – I actually aim for under 100kb if I can

- Use software to compress images you’ll use on your site – or use a plugin. Just be mindful of which one you select because the trade off could be a bulky plugin that needs to load

- Resize images to match their display size instead of relying on CSS scaling it

- Lazy loading should be enabled in your CMS. If it’s not, add loading="lazy" to the image tag of any image that’s below the fold when a page loads. Newer versions of WordPress have this built in.

- Minimizing 3rd Party Scripts (INP & CLS)

- Remove unnecessary plugins (this is the curse of WordPress), and third-party widgets (chatbots, social share buttons, ad scripts)

- Replace resource heavy plugins with leaner alternatives when possible

- Use Google Tag Manager to control when scripts load

- Delay non-essential scripts so they don’t interfere with page load

- Yes you’re gonna need to look some of this up because we’re only on step 2 of an already long post

- Choose a Better Host and/or Hosting Plan (LCP)

- If your hosting is slow, consider switching to a performance optimized provider. I personally use WP Engine, but I have lots of clients who are on Cloudways or AWS. When it comes to hosting, often times “You get what you pay for”

- Use a CDN (most hosts include this as long as you’re not on their cheapest plan). A CDN is a Content Delivery Network: basically, components of your site are cached on servers all over the place, so no matter where the user is, your site won’t have a bunch of delays.

- An example, I have a US client whose parent company is in Europe. They had their site redesigned, but to save money the parent company decided to host it on their servers in Germany, and the dev company didn’t configure their CMS to utilize a CDN. The client was trying to figure out why it took so long for pages to load, and that was the reason.

- In the era of instant gratification, seconds matter. If your page takes over 3 seconds to load, you could have already lost over 50% of your potential users.

- Improve Font Loading (CLS)

- Use system fonts instead of custom web fonts to reduce loading delays

- Preload fonts (<link rel="preload" href="font-url" as="font" type="font/woff2" crossorigin>) to prevent shifts

- Limit the number of different fonts, and weights

- Use a Page Speed Plugin (WordPress & Shopify)

- Plugins can handle caching, minification, and lazy loading with minimal setup, and this is why I typically recommend a reputable page speed plugin

- WordPress – I recommend WP Rocket to improve Page Speed on WordPress sites. It’s $59/year for a single site, which is about $5/mo if cost averaging

- Shopify – there’s an abundance of speed optimization apps to compress files and manage unnecessary scripts, but I defer this to the expertise of a Shopify developer. While I can, and do optimize Shopify sites, I don’t work on them as frequently as WordPress which is why I don’t have a recommendation

- For Sites Running Ads & Embeds (CLS & LCP)

- Ensure ad placeholders have fixed dimensions to prevent layout shifts

- Avoid dynamically injected elements above the fold that push content down

- Use asynchronous loading (async or defer) for embedded content like YouTube/Vimeo videos, and social media feeds

- Sites that rely on ad revenue, such as editorial ones, oftentimes prioritize their ads over the user experience. While I get why, it can actually be counterproductive because a poor user experience can negatively impact your SEO efforts. This means fewer users, less traffic, and less revenue from ad impressions. There is an abundance of alternative sites out there, and if you don’t put the user first, they’ll likely go elsewhere.
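Several of the image items above can be checked from a page’s HTML without touching the CMS. This is a rough sketch using Python’s built-in html.parser. The checks and their labels are my own simplification: it flags every image that isn’t lazy loaded, so you still need to exclude above-the-fold images yourself, and it judges format by file extension only.

```python
from html.parser import HTMLParser

class ImageAudit(HTMLParser):
    """Flags <img> tags that may hurt LCP or CLS, based on their attributes only."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        # Explicit dimensions let the browser reserve space and avoid layout shifts.
        if "width" not in attributes or "height" not in attributes:
            self.issues.append((src, "missing width/height"))
        if attributes.get("loading") != "lazy":
            self.issues.append((src, "not lazy loaded"))
        if not src.lower().endswith(".webp"):
            self.issues.append((src, "not a next-gen format"))

def audit_images(html):
    parser = ImageAudit()
    parser.feed(html)
    parser.close()
    return parser.issues
```

Run it on the HTML of a template or two and you’ll get a list of (image, issue) pairs to work through or hand to your developer.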

Step 3: How to Complete a User Experience (UX) Audit

Mobile Friendliness Test

We live during a time where most people walk around with a miniaturized computer in their hand that also works as a mobile communication device, and this is why your site being mobile-friendly matters.

It also matters to Google because of mobile-first indexing: Google primarily uses the mobile version of your site for ranking and indexing, since most searches start on mobile these days. A poor mobile experience can hurt your rankings, traffic, and engagement. Ensuring responsive design, fast load times, and mobile-friendly navigation is essential for SEO success.

There are two ways you can execute the mobile friendly test:

- Lighthouse: Google retired its standalone Mobile-Friendly Test as well as the mobile usability report in Google Search Console, so using Lighthouse in Chrome is one option.

- Bing Mobile Friendly Test – I’m old school and like the simplicity of inputting a URL and clicking “analyze” which is why I use this free tool from Bing. I’ve tested all my clients’ sites on this tool and Lighthouse, and have yet to have any results that conflicted.

Screenshot of Bing Mobile Friendliness Tool

User Experience Audit

Improving usability and navigation enhances user experience (UX) and boosts SEO by keeping visitors engaged. Auditing for the user experience is a manual process that needs to be done on both mobile, and desktop. Here are the items I inspect on almost every UX Audit:

- Menu is easily found, and comprehensible – I’ve come across far too many small sites that have mega menus. If you’re not a major retailer, odds are you do not need a mega menu. Instead, you need to embrace simplicity: “addition by subtraction.”

- Accessible buttons. There’s nothing more frustrating than finding a site I like on desktop, but hate on mobile or the other way around. Accessible buttons can include floating buttons, the menu button, etc. Navigating your site should be intuitive to the user on both desktop and mobile.

- Logical page hierarchy to make navigation effortless because it allows the user to scan for important sections of the page, and quickly comprehend content without having to hunt for it.

- Breadcrumbs help users and search engines understand your structure, and the relationship between pages.

- Core Web Vitals were discussed at length above. Simple changes such as prioritizing above-the-fold content, lazy loading images, and limiting pop-ups can significantly enhance both usability and performance. That leads to a better experience for your users and improves UX quality signals, such as lower bounce rates, which can improve your rankings.

Step 4: On-Page SEO & Content Optimization

On-page SEO and content optimization ensure your pages are both search engine-friendly and valuable to users. This step focuses on refining key elements like meta tags, headings, keyword placement, internal linking, and content quality to improve relevance and rankings. A well-optimized page not only attracts search engines but also enhances user engagement, leading to higher conversions and lower bounce rates.

Meta Tags

I listed Title Tags and Meta Descriptions up above in the evaluating your crawl section. So I won’t go into too much detail here. However, understand both are critical for SEO because Title Tags send direct ranking signals AND can impact CTR (click through rate), and Meta Descriptions can impact CTR too.

Title Tags (aka Meta Titles) Best Practices

- How to Write Title Tags: These should be written to include your primary keyword target, be compelling to entice the user to click, and as naturally readable as possible.

- Title Tag Length: Be as concise as possible, generally you get somewhere between 50-60 characters before it will truncate in the SERP. If you need to go beyond that, you can. But, you need to make sure the visible part resonates with your audience in search – so most important information first.

Meta Description Best Practices

- How to Write Meta Descriptions: While not a ranking factor, a meta description should provide a clear, engaging summary of the page, and use other keywords that describe what users can expect to find on the page. E.g. if you sell hoodies and used that term in the Title Tag, then perhaps “Hooded sweatshirt” could be used in the meta description, because Google and other search engines will bold terms and phrases they think are applicable to the user’s search.

- Meta Description Length: Generally you get about 150-160 characters for meta descriptions before it will truncate on SERPs. I’ve found 9 times out of 10, if it’s under 155 characters, odds are it won’t truncate.

Title Tag & Meta Description Hints

- Can you include some sort of “hook” for the user to click your link? Such as, is your site running a buy one get one half off on a certain product category? Or, do orders over $50 ship to the user for free? Including these can motivate users to click on your links instead of your competitors’ links in search results.

- Avoid duplicate titles and descriptions across pages, and ensure each is unique, concise, and aligned with user intent to maximize visibility and engagement.

- Easy ways to save on pixel count are using “&” instead of “and” and using “|” instead of a hyphen.

Title tag and meta description in Google SERP

Heading Structure

I like to follow a logical “cascading” hierarchy in my heading usage because it helps the user understand which sections belong together. It’s especially useful for long posts like this one. You’ll notice that I’ve only used one H1 for the post title, and you won’t find an H3 that’s a parent to an H1 or H2, etc. Each H3 represents the start of a new step, and H4s are sub-sections of the H3.

In my H1 and H2, I use keywords that are representative of my entire page. However, in the other headings, I use keywords for content that’s specific to that section.
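A heading hierarchy like this is easy to verify with a script. Here’s a minimal sketch, assuming two rules of my own choosing: exactly one H1 per page, and no heading that skips a level on the way down (for example an H2 followed directly by an H4).

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Records the level (1-6) of each heading in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))

def heading_problems(html):
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected one h1, found {h1_count}")
    # Moving back up (h3 to h2) is fine; skipping down a level is not.
    for previous, current in zip(parser.levels, parser.levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} jumps to h{current}")
    return problems
```

An empty list means the outline cascades cleanly. Anything else tells you where the hierarchy breaks.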

Keyword Optimization / Placement

Using keywords is crucial for SEO, but overloading content with them (keyword stuffing) can harm readability, and hinder your rankings. It’s 2025, and keyword density is not a ranking factor anymore. Even so, ensuring you have the right cluster of keywords on your page is important. A keyword cluster is simply a group of keywords that represent searchers with a similar search intent. For example: sports cars; luxury sports cars; and blue luxury sports cars are all different keywords, but they all represent users who are looking for sports cars.

Diversify Keyword Usage

First, focus on sounding natural in your writing. Place your primary keyword in critical areas such as title tags, meta descriptions, URLs, H1 headings, and the first 100 words or so of your content. Then sprinkle your keyword clusters (variants) and semantic keywords (e.g. baseball hat vs baseball cap) throughout the text to improve relevance without sounding unnatural. Maintain a conversational, reader-first approach, ensuring keywords flow naturally within sentences. While matching a keyword exactly is helpful, Google’s algorithm is designed to understand natural language, so it’s not a necessity to use a keyword exactly as you see it in autosuggest or your SEO tools.

Internal Linking Strategy

An effective internal linking strategy helps search engines crawl and understand your site structure while improving user navigation. Each content page should have relevant internal links pointing to related content, or if it’s a “final destination page” then some call to action (CTA) you want users to perform. Internal links guide both users and search engines to important pages, and help you establish your E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness).

Using descriptive anchor text that naturally includes keywords, rather than generic phrases like “learn more” lets users know what they can expect to find on the page you’re linking to, and in turn helps your pages rank better. Prioritize linking to high-value pages such as pillar content, service pages, or in-depth guides to distribute link equity and improve rankings.

Additionally, ensure evergreen content pieces aren’t buried too deep in your site hierarchy, as pages that require too many clicks from the homepage may struggle to rank. A well-structured internal linking strategy enhances SEO, keeps visitors engaged, and helps search engines determine your most important pages so they can index those pages more efficiently.
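“Too many clicks from the homepage” can be measured once you have a list of which pages link to which. The sketch below runs a breadth-first search over a hypothetical links dictionary (page mapped to the pages it links to). The max_depth of 3 is my own illustrative cutoff, not a rule from Google.

```python
from collections import deque

def click_depths(links, home="/"):
    """Return the minimum number of clicks from home to every reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def buried_pages(links, max_depth=3, home="/"):
    """List pages that need more than max_depth clicks to reach."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_depth)
```

Pages that never show up in click_depths at all aren’t linked internally from anything reachable, which is worth a look too.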

Identifying Thin, Outdated, or Duplicate Content

Low-quality content can hurt your site’s rankings, reduce engagement, and waste valuable crawl budget. Thin content refers to pages with little value, common traits are: minimal text; auto-generated content (such as RSS feeds); or duplicate pages offering no unique insights. Use tools like Google Search Console, GA4, Screaming Frog, or Ahrefs to identify pages with low word count, poor engagement, high bounce rates, or high exit rates.

Outdated content can also weaken your SEO. Look for pages with old statistics, irrelevant information, or declining traffic, and update them with fresh data, improved readability, and enhanced media like images or videos. If a page is no longer relevant, consider redirecting it to a related, stronger page or consolidating multiple weak pages into a comprehensive resource. And this part sounds scary, but to more seasoned SEO professionals it’s not – sometimes the best action is to just delete the page and 301 redirect the URL. On a very large content site, I’ve noindex tagged, canonicalized, or deleted nearly half of the content pages before. Let’s be real, do you think anyone cared about a conference that happened a decade ago, especially when the conference is annual? Probably not.

For duplicate content, check for similar or identical pages that may be competing for rankings. Use canonical tags, 301 redirects, or parameter handling to avoid indexing issues and consolidate ranking signals. A report I like to check is Google Search Console > Indexing > Pages, where I look for items with “canonical” in the reason, especially ones where Google uses a different canonical than the one you’re suggesting. Also, Screaming Frog is amazing at this, but the tool takes some configuration to uncover near duplicate content. And as you guessed, Ahrefs can do this as well. After you’ve run a crawl from “Site Audit,” go to the “Content Quality Report” and that’s where you can find duplicate and near duplicate content without a canonical.
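To give you a feel for what “near duplicate” means in practice, here’s a simplified sketch that compares two pages’ text by overlapping three-word sequences (shingles) and scores them with Jaccard similarity. This is a common textbook approach that I’m using for illustration. I’m not claiming it’s how Screaming Frog or Ahrefs calculate their scores.

```python
def shingles(text, size=3):
    """Return the set of overlapping word sequences of the given size."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(text_a, text_b, size=3):
    """Jaccard similarity of the two texts' shingle sets, from 0.0 to 1.0."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)
```

Scores close to 1.0 suggest two pages are saying nearly the same thing and may be competing with each other. Where you draw the line is a judgment call.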

Regularly auditing and improving content ensures your site remains relevant, authoritative, valuable, and competitive in search results.

Step 5: Advanced Technical SEO Audit

Fixing JavaScript SEO Issues (Dynamic Rendering, Lazy Loading)

JavaScript can enhance website functionality but may cause SEO issues if search engines struggle to crawl and index content properly. Common JavaScript-related SEO challenges include content hidden behind client-side rendering, improper lazy loading, and blocked resources.

What’s the Difference Between Server-side & Client-side Rendered

Server-side rendered (SSR) sites generate and deliver fully rendered HTML from the server, making them easier for search engines to crawl, while client-side rendered (CSR) sites rely on JavaScript to load content in the browser, which can cause indexing challenges if not properly optimized.

1 – Dynamic Rendering for Better Indexing

Search engines, especially Google, can render JavaScript, but it’s not always perfect. If your important content (text, links, metadata) is dynamically generated using JavaScript, Google may not index it properly. To fix this:

- Implement dynamic rendering, where bots receive a pre-rendered HTML version while users see the JavaScript version. Tools like Rendertron or Puppeteer can help.

- Use server-side rendering (SSR) for critical pages to ensure search engines immediately see the full content.

Prominent Example (who’s not my client): Nespresso comes to mind as a JavaScript client-side rendered site. While it’s beautiful and has a great user experience, it poses SEO challenges because Google may struggle to crawl and index key content effectively. As a result, Nespresso faces difficulties ranking competitively for non-branded keywords like “automatic espresso machine” or “coffee machines,” as Google may not fully process product details, category pages, and other important elements. Without server-side rendering (SSR) or dynamic rendering, their organic visibility remains heavily reliant on branded search terms, limiting their reach in broader search queries. Now have a brain, how are they still successful? Because Nespresso has a very large brand name they can ride, and odds are you don’t. Can it be better? Without a doubt!

How to Test if a Site is SSR or CSR

Method 1: Disable JavaScript and Reload the Page

- Open Google Chrome (or any browser with DevTools).

- Right-click anywhere on the page and select Inspect (or press Ctrl + Shift + I on Windows / Cmd + Option + I on Mac).

- Go to the Console tab and click the three dots menu (⋮) in the top right.

- Navigate to Settings > Preferences > Debugger and check the box for “Disable JavaScript” (or in Firefox, go to about:config and set javascript.enabled to false).

- Reload the page (F5 or Ctrl + R).

- If the content is still visible, the site is server-side rendered (SSR) because the HTML was loaded before JavaScript execution.

- If the page is mostly blank or missing key content, it is client-side rendered (CSR) because the content relies on JavaScript to load.

Method 2: Disable JavaScript via Settings & Reload

- Click the three dots menu (⋮) in the top right and click settings

- Click “Privacy and security” in the left menu

- Click “Site settings” in the middle

- Scroll down to the Content section, then click JavaScript

- Under Default behavior select the option for “Don’t allow sites to use JavaScript”

- Go back to the window you’re testing and Reload the page (F5 or Ctrl + R)

- If the content is still visible, the site is server-side rendered (SSR) because the HTML was loaded before JavaScript execution.

- If the page is mostly blank or missing key content, it is client-side rendered (CSR) because the content relies on JavaScript to load.
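The same idea can be scripted: look at the raw HTML the server sends, before any JavaScript runs, and check whether a phrase you can see on the rendered page is actually in it. This is a minimal sketch with hypothetical function names. It takes the HTML as a string, so you’d fetch it first (for example with urllib.request.urlopen), and a missing phrase is a hint of client-side rendering rather than proof.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collects text from raw HTML, ignoring the contents of script and style tags."""
    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)

def text_without_javascript(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split())

def phrase_in_raw_html(html, phrase):
    """True if the phrase appears in the page text before any JavaScript executes."""
    return phrase.lower() in text_without_javascript(html).lower()
```

Pick a phrase from the main content, like a product name or the first line of body copy. If it’s in the raw HTML, that content is being delivered by the server.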

2 – Proper Lazy Loading Implementation

Lazy loading helps improve page speed by deferring offscreen content (like images and videos) until needed. However, if not done correctly, search engines may not index these elements. To ensure proper indexing:

- Use native lazy loading by adding loading="lazy" to <img> tags.

- Avoid lazy loading critical content above the fold that should be visible immediately.

- Ensure lazy-loaded elements are present in the HTML so Google can discover them.
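The three checks above can be roughed out in a script. This is a minimal sketch, assuming you already have the page’s HTML as a string and that the first image in the markup is the one above the fold (which won’t be true for every layout). The class name and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ImageAudit(HTMLParser):
    """Collect <img> tags and flag common lazy-loading problems."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[str] = []
        self.image_count = 0

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        self.image_count += 1
        attributes = dict(attrs)
        label = attributes.get("src") or attributes.get("data-src") or f"image #{self.image_count}"
        # No real src means the image URL only appears after JavaScript runs.
        if not attributes.get("src"):
            self.issues.append(f"{label}: no src attribute in the HTML")
        # Assumption for this sketch: the first image is above the fold.
        if self.image_count == 1 and attributes.get("loading") == "lazy":
            self.issues.append(f"{label}: first image is lazy loaded")


sample_html = """
<img src="/hero.jpg" loading="lazy" alt="Hero">
<img src="/team.jpg" loading="lazy" alt="Team">
<img data-src="/gallery.jpg" class="lazy" alt="Gallery">
"""

audit = ImageAudit()
audit.feed(sample_html)
for issue in audit.issues:
    print(issue)
```

In this sample, the hero image gets flagged for being lazy loaded at the top of the page, and the gallery image gets flagged because its URL only lives in a data-src attribute that a script has to swap in.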

3 – Allow Google to Crawl JavaScript Files

Sometimes, robots.txt blocks JavaScript files, preventing search engines from rendering pages correctly. Check your robots.txt file to ensure essential scripts aren’t blocked. Use Google Search Console’s URL Inspection Tool to see how Googlebot renders your pages.
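As a quick illustration, Python’s built-in robots.txt parser can tell you whether a given script or stylesheet URL is blocked. The robots.txt rules and resource URLs below are hypothetical, and keep in mind this parser does simple prefix matching, so it may not treat wildcard rules the same way Googlebot does. Use it as a first pass and confirm in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks a script directory.
robots_txt = """
User-agent: *
Disallow: /assets/js/
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Hypothetical resources the page needs in order to render.
resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/site.css",
]

# Anything in this list is a file Googlebot is told not to fetch.
blocked = [url for url in resources if not parser.can_fetch("Googlebot", url)]
print(blocked)
```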

By addressing these JavaScript SEO issues, you ensure search engines can crawl, render, and index your site correctly, leading to better rankings and user experience. If you have a CSR site and are thinking “Nespresso doesn’t do this, and they’re successful. Do I really need to do this?” Go back and read the sentence starting with “Now have a brain.”

Schema Markup: How to Optimize Structured Data

Schema markup is a type of structured data that helps search engines understand your content better, enhancing how your pages appear in search results with rich snippets like star ratings, FAQs, and product details. Implementing schema correctly can improve click-through rates (CTR) and search visibility. Also, Schema.org is the industry standard and what Google uses to understand structured data. The frustrating part (and there are many when it comes to Google) is that their structured data tool doesn’t always agree with Schema.org’s tool on whether your schema markup is formatted correctly.

1 – Choose the Right Schema Type

Identify the most relevant schema markup for your content. Common types include:

- Article – For blog posts and news articles

- Product – For eCommerce product pages (includes price, availability, and reviews)

- LocalBusiness – For businesses with physical locations

- FAQPage & HowTo – For content that answers common questions

- BreadcrumbList – Helps search engines understand site hierarchy

2 – Implement Schema Markup Correctly

- Use Google’s Structured Data Markup Helper to generate schema code easily.

- Implement schema using JSON-LD (officially supported by Google) by embedding the script inside the <head> or <body> of your page.

- For CMS platforms like WordPress, I use plugins like Yoast SEO (Premium), or Schema Pro for easy integration.
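If you’re not using a plugin, here’s a minimal sketch of what generating a JSON-LD block could look like. The headline, author, and date are placeholder values, and Article is just one of the types listed earlier. Check Schema.org and Google’s documentation for the properties your page type actually needs.

```python
import json

# Hypothetical article details; swap in your own values.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How to Complete an SEO Audit",
    "author": {"@type": "Person", "name": "Jane Doe"},
    "datePublished": "2025-01-15",
}

# Wrap the JSON in the script tag that goes in the page's <head> or <body>.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(article, indent=2)
    + "\n</script>"
)
print(script_tag)
```

Building the markup from a dictionary instead of hand-typing it means the quotes, commas, and brackets always come out as valid JSON, which removes one of the most common reasons validators reject a block.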

3 – Validate and Test Your Markup

- Use Google’s Rich Results Test to check if your schema is correctly implemented and eligible for enhanced search features.

- Use Schema.org’s Validator to ensure there are no errors in your markup. Sometimes everything checks out here, but Google’s tools still flag warnings and/or errors. When that happens, I still trust what Schema.org tells me.

4 – Keep Schema Markup Updated

- Regularly audit structured data to ensure it aligns with your content and Google’s latest guidelines.

- Update schema markup when you make changes to product details, FAQs, or business information.

- Don’t let perfection get in the way of “good enough.” There’s lots you can mark up, but finding every single little opportunity can be saved for a site with a very mature SEO strategy.

By properly implementing and maintaining schema markup, you increase your chances of appearing in rich snippets, knowledge panels, and featured results, improving both visibility and user engagement.

Handling Canonicalization & Hreflang Tags for Multilingual & Locale Sites

Managing canonicalization and hreflang tags correctly is crucial for SEO in multilingual and multi-regional websites, ensuring search engines index the right version of your content while avoiding duplicate content issues. If you’re a beginner, or managing your own site – I’m gonna assume this likely doesn’t apply to you. However, I’m including it for those SEO professionals who might be using this guide as their checklist.

1 – Canonicalization for Multilingual Pages

Canonical tags (<link rel="canonical" href="URL">) tell search engines which version of a page is the primary (preferred) version to avoid duplicate content conflicts. When handling multilingual pages:

- If the same content exists in multiple languages, each language version should have its own self-referencing canonical tag (e.g., example.com/en/ and example.com/fr/ should point to themselves).

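- If same-language regional versions are exact copies with no localization (e.g., identical U.S. and U.K. English pages), you may choose to canonicalize them to the main version instead of treating them as separate pages. True translations are not duplicates, so keep their self-referencing canonicals.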

- Avoid conflicting signals—do not use canonical tags that contradict hreflang tags.

2 – Implementing Hreflang Tags for International SEO

Hreflang tags help search engines serve the correct language or regional version of a page to users. To set them up properly:

- Use <link rel="alternate" hreflang="x" href="URL"> in the <head> section or XML sitemaps.

- Assign the correct language and country codes (e.g., en-us for English in the U.S., fr-fr for French in France).

- Always include a self-referencing hreflang tag for each version of the page.

- Add an “x-default” hreflang tag for users who don’t match a specific language version.
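To make the setup rules above concrete, here’s a small sketch that builds the hreflang tags for a page. The URLs, language codes, and function name are placeholders. The key idea is that the same full set of tags goes on every version of the page, which is what gives each version its self-referencing tag.

```python
def hreflang_tags(versions: dict[str, str], default_lang: str) -> list[str]:
    """Build the full set of hreflang tags that every version of the page should carry."""
    tags = [
        f'<link rel="alternate" hreflang="{lang}" href="{url}">'
        for lang, url in sorted(versions.items())
    ]
    # x-default points at the version shown to users who match no listed language.
    tags.append(
        f'<link rel="alternate" hreflang="x-default" href="{versions[default_lang]}">'
    )
    return tags


# Hypothetical language versions of one page.
versions = {
    "en-us": "https://example.com/en/",
    "fr-fr": "https://example.com/fr/",
}
for tag in hreflang_tags(versions, default_lang="en-us"):
    print(tag)
```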

3 – Common Pitfalls to Avoid

- Mixing canonical and hreflang incorrectly – Hreflang should be used to indicate alternate versions, while canonical should reference the same language version.

- Forgetting to bidirectionally link pages – If example.com/es/ references example.com/en/, then example.com/en/ must also reference example.com/es/.

- Using incorrect language codes – Ensure you’re using ISO 639-1 language codes and ISO 3166-1 alpha-2 country codes (e.g., en-gb, not en-uk).
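Here’s a rough sketch of how you might check for these pitfalls once you’ve collected the hreflang annotations from a crawl. The data structure, function name, and sample URLs are assumptions for illustration. Also note the pattern only checks the shape of a code (two letters, optional two-letter region), so a well-formed but wrong code like en-uk would still slip through.

```python
import re

# Simplified pattern: two-letter language, optional two-letter region, or x-default.
# (Assumption: real hreflang values can also include script subtags, ignored here.)
HREFLANG_PATTERN = re.compile(r"^(x-default|[a-z]{2}(-[a-z]{2})?)$", re.IGNORECASE)


def audit_hreflang(pages: dict[str, dict[str, str]]) -> list[str]:
    """pages maps each URL to the {hreflang: href} annotations found on that URL."""
    problems = []
    for url, annotations in pages.items():
        if url not in annotations.values():
            problems.append(f"{url}: missing self-referencing hreflang")
        for code, target in annotations.items():
            if not HREFLANG_PATTERN.match(code):
                problems.append(f"{url}: invalid hreflang code '{code}'")
            # The return-link check only applies to targets that were crawled.
            if target != url and target in pages and url not in pages[target].values():
                problems.append(f"{url}: {target} does not link back")
    return problems


# Hypothetical crawl results: /es/ never references /en/ and uses an underscore.
pages = {
    "https://example.com/en/": {
        "en-us": "https://example.com/en/",
        "es-es": "https://example.com/es/",
    },
    "https://example.com/es/": {
        "es_es": "https://example.com/es/",
    },
}
problems = audit_hreflang(pages)
for problem in problems:
    print(problem)
```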

To prevent duplicate content issues, you must properly manage canonicalization and hreflang tags. When done correctly, you’ll improve rankings in the right geographic regions and enhance the user experience for multilingual / locale audiences.

Step 6: How to Optimize Off-Page SEO

Checking Backlink Quality: Toxic vs. Authoritative Links

Backlinks are a major ranking factor, but not all links are beneficial. While authoritative backlinks from reputable sites can improve your SEO, toxic backlinks from spammy or low-quality domains may seem harmful. However, Google’s algorithm is sophisticated enough to ignore bad links, and disavowing backlinks is rarely necessary unless you receive a manual action from Google.

1 – How to Identify High Quality (Authoritative) Backlinks

Strong backlinks typically come from:

- Trusted, high-authority domains (e.g., well-known publications, industry leaders).

- Relevant sites within your niche.

- Editorially placed links (not paid or manipulated).

- Diverse, natural link profiles rather than excessive links from a single domain.

2 – How to Spot Toxic or Low-Quality Backlinks

Some backlinks may seem problematic, such as:

- Links from spammy, irrelevant, or low-authority websites.

- PBN (Private Blog Network) links designed to manipulate rankings.

- Links from link farms or automated directories.

- Foreign or unusual domain extensions unrelated to your content.

However, despite SEO tools flagging these as “toxic,” Google’s John Mueller has clarified:

“The concept of toxic links is made up by SEO tools so that you pay them regularly.” He also added “Nothing has really changed here – you can continue to save yourself the effort.”

3 – Should You Disavow Toxic Backlinks?

Unless you’ve received a manual action in Google Search Console for unnatural links, disavowing backlinks is unnecessary and could do more harm than good. Google’s algorithm already devalues spammy links without penalizing your site, so trying to remove them manually is often a poor use of time.

4 – What to Do with Toxic Backlinks Instead

- Monitor your backlink profile regularly using tools like Ahrefs, and the manual actions in Google Search Console.

- Focus on earning high-quality links rather than worrying about low-quality ones.

- I use the “Skyscraper Method” coined by Brian Dean (Backlinko), minus the email outreach part.

- Research the method if you’re not familiar with it. Why do I skip the outreach? I’ve found the juice isn’t worth the squeeze when emailing industry bloggers and site owners. It takes a lot of time without a lot of reward. I earn links faster by sharing content in SEO groups, forums, etc. So my advice is to skip email outreach and just share with groups in your industry.

- Ignore “toxic link” warnings from SEO tools unless there’s clear evidence of a penalty. If you’re an industry professional, you can spend this time doing more valuable things.

By prioritizing quality backlinks and avoiding unnecessary disavowals, you can maintain a strong, natural backlink profile without falling for misleading claims about toxic links.

Using Ahrefs for Link Audits

Regular link audits help you analyze your backlink profile, identify opportunities, and spot potential issues. Ahrefs is my go-to tool for this process – but BrightEdge, SEM Rush, Moz, and a plethora of other mainstream SEO tools should have this functionality.

1 – Checking Backlink Quality

- In Ahrefs, go to the Backlink Profile > Backlinks report to see all incoming links, filtering by DR (Domain Rating) to assess quality.

- Use the Lost Links report in Ahrefs to monitor backlink fluctuations.

2 – Identifying Toxic or Spammy Links

- Lots of SEO tools highlight low-quality links (SEM Rush makes this very prominent), and it’s important to be aware of these.

- Just remember that disavowing isn’t necessary unless you have a manual penalty.

3 – Finding Link-Building Opportunities

- Analyze your competitors’ backlinks using Ahrefs’ “Site Explorer” to discover sites linking to them but not you.

- Identify broken backlinks pointing to your site and reclaim them by 301 redirecting old URLs or ones that 404. Again, I love Ahrefs for this because the feature is literally called “Broken backlinks.”
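The competitor comparison in the first bullet is really just a set difference. If you export referring domains from your SEO tool, a sketch like this shows the idea. The domains and function name are made up, and how you load your export is up to you.

```python
def link_gap(
    your_domains: set[str], competitor_domains: dict[str, set[str]]
) -> dict[str, list[str]]:
    """Map each referring domain you lack to the competitors it links to."""
    gap: dict[str, list[str]] = {}
    for competitor, domains in competitor_domains.items():
        for domain in domains - your_domains:
            gap.setdefault(domain, []).append(competitor)
    # Domains linking to the most competitors come first.
    return dict(sorted(gap.items(), key=lambda item: (-len(item[1]), item[0])))


# Hypothetical referring-domain exports.
yours = {"news.example", "blog.example"}
competitors = {
    "rival-one.example": {"news.example", "trade-mag.example", "forum.example"},
    "rival-two.example": {"trade-mag.example", "podcast.example"},
}
gap = link_gap(yours, competitors)
for domain, linked_rivals in gap.items():
    print(domain, "links to", linked_rivals)
```

A domain that links to several of your competitors but not to you is usually a more realistic target than one that links to a single rival, which is why the sketch sorts those to the top.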

How to Improve Off-Page SEO

Off-page SEO strengthens your website’s authority through high-quality backlinks, brand mentions, and social engagement. Focus on:

- Link-Building – Earn backlinks from reputable sites through guest posting, fixing broken backlinks, and the Skyscraper Method (again, look it up for an effective content-driven approach).

- PR & Brand Mentions – Get featured on industry blogs, news sites, and niche publications to build credibility. Brand mentions drive search.

- Social Signals – While not a direct ranking factor, social shares and engagement help increase visibility and also drive traffic to your content.

Consistently applying these strategies enhances your domain authority and improves search rankings. However, some people who do SEO make backlinks sound like the be-all and end-all of getting a site to rank. I can tell you they’re not. I’ve been able to get client sites to outrank other sites despite having fewer backlinks. At the end of the day, backlinks are one of many ranking signals – remember, there are thousands of them.

Step 7: How to Complete a Local SEO Audit (If Applicable)

I know lots of people discovering DRVN for the first time are small companies operating location-based businesses (such as landscapers, or store locations) where local SEO strategies are important. That’s why I’m including this section. I’ll also admit, very early on in my SEO journey I had a client who operated primarily an eCommerce business. But it was nearly 2 months into the engagement when I discovered he also had a storefront at his warehouse location. He never mentioned it, but I never asked about it either. While it may sound silly, if you’re working with a new client, be sure to ask this question.

Google Business Profile Optimization Checklist

Optimizing your Google Business Profile (GBP) (formerly Google My Business (GMB)) is essential for local SEO and improving visibility in local search results. Follow this checklist to ensure your profile is fully optimized:

- Claim & Verify Your Business: Ensure you have ownership of your GBP and complete the verification process.

- Use Accurate NAP Information: Keep your Name, Address, and Phone Number (NAP) consistent across all platforms.

- Choose the Right Business Categories: Select primary and secondary categories that best describe your business.

- Write a Compelling Business Description: Include relevant keywords naturally and highlight what makes your business unique. Put the most important information towards the beginning of your description so users don’t have to click “more” to find it.

- Upload High-Quality Photos & Videos: Add real images of your storefront, products, and team to increase engagement.

- Enable Messaging & Q&A: Allow customers to contact you directly through GBP messaging and answer frequently asked questions.

- Regularly Post Updates: Use Google Posts to share promotions, events, and important updates to keep your listing active. E.g. I have clients in states where 15 inches of snow can fall, and it can be -25 outside – so it’s a great place to announce schedule deviations too.

- Monitor & Respond to Reviews: This is part of your brand reputation management. Engage with customer reviews by thanking positive reviewers and addressing negative feedback professionally.

- Add Business Hours & Holiday Hours: Keep your hours up to date to avoid misleading potential customers. E.g. there’s nothing more frustrating than showing up to a business that requires appointments but it’s not listed on their profile.

- Use UTM Tracking in Your GBP Website Link: Add UTM parameters to track traffic from GBP in Google Analytics.
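For the UTM item, here’s a minimal sketch of building the tagged link. The source, medium, and campaign values are only one possible naming convention (an assumption, not a requirement), so pick whatever matches how you already label traffic in Google Analytics and stay consistent.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str) -> str:
    """Append UTM parameters while keeping any existing query string."""
    parts = urlsplit(url)
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(query)))


# The parameter values are hypothetical; pick a naming convention and stick to it.
tagged = add_utm("https://example.com/", "google", "organic", "gbp-listing")
print(tagged)
```

Paste the resulting URL into the website field of your profile so visits from the listing show up under their own campaign instead of blending into the rest of your organic traffic.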

Check Local Citations and NAP Consistency

Local citations, which are mentions of your business name, address, and phone number (NAP) across directories, websites, and social platforms, are essential for local SEO rankings. Inconsistent NAP details can confuse search engines and hurt your local search visibility.

1 – Audit Your Existing Citations

- You can use tools like Moz Local, BrightLocal, or Yext to scan for inconsistent or missing citations.

- Alternatively, you can manually check major directories, including Google Business Profile, Yelp, Bing Places, Apple Maps, Facebook, and industry-specific listings and directories. Wherever your business shows up, make sure your NAP matches what’s on your Google Business Profile and website.

2 – Ensure NAP Consistency

- Your business name, address, and phone number should be identical across all platforms—avoid variations like “Street” vs. “St.”

- Keep your website URL format consistent (e.g., always use https:// if applicable).
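If you’re checking more than a handful of listings by hand, a small script can sort them into “identical,” “same business but formatted differently,” and “actually wrong.” This is a sketch under a few assumptions: the abbreviation map is tiny and hypothetical, phone numbers are treated as 10-digit North American numbers, and the business details are invented.

```python
import re

# Small, hypothetical abbreviation map; extend it for your own listings.
ABBREVIATIONS = {"st": "street", "ave": "avenue", "rd": "road", "ste": "suite"}


def normalize_nap(name: str, address: str, phone: str) -> tuple[str, str, str]:
    """Reduce a listing to a comparable form so only real differences remain."""
    words = re.sub(r"[^\w\s]", " ", address.lower()).split()
    address_key = " ".join(ABBREVIATIONS.get(word, word) for word in words)
    phone_key = re.sub(r"\D", "", phone)[-10:]  # assumes 10-digit North American numbers
    return (" ".join(name.lower().split()), address_key, phone_key)


def compare_listing(official: tuple[str, str, str], listing: tuple[str, str, str]) -> str:
    """Classify a listing against the official NAP."""
    if listing == official:
        return "exact match"
    if normalize_nap(*listing) == normalize_nap(*official):
        return "formatting differs"
    return "details differ"


# Hypothetical business details.
official = ("Example Landscaping", "123 Main Street", "(612) 555-0142")
listings = {
    "yelp": ("Example Landscaping", "123 Main St.", "612-555-0142"),
    "facebook": ("Example Landscaping", "123 Main Street", "(612) 555-0199"),
}
results = {site: compare_listing(official, nap) for site, nap in listings.items()}
print(results)
```

Fix the “details differ” listings first since those point customers to the wrong place, then clean up the “formatting differs” ones so everything reads identically.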

3 – Fix Incorrect or Duplicate Listings

- Claim and update outdated listings to match your official business details.

- Remove or merge duplicate listings to avoid conflicting information.

4 – Maintain Ongoing Accuracy

- Regularly monitor your citations and update them whenever your business moves, changes phone numbers, or rebrands.

- Encourage consistency across social media and other platforms where your business is mentioned.

Don’t overlook the importance of an accurate NAP profile across all citations as it strengthens your local SEO, improves credibility, and helps search engines trust your business information.

How Reviews & Ratings Impact Your Local Rankings

Customer reviews and ratings play a significant role in local SEO rankings, influencing both search engine algorithms and consumer trust. Google prioritizes businesses with high ratings, frequent reviews, and active engagement, making them more likely to appear in local pack results and Google Maps rankings.

1 – How Reviews Affect Rankings

- Quantity & Recency: Businesses with a higher volume of fresh, authentic reviews tend to rank better.

- Star Ratings & Sentiment: Higher average ratings (4.0+ stars) improve credibility and can increase click-through rates (CTR).

- Keyword Usage in Reviews: Reviews that naturally mention products, services, or location-specific terms can help with search relevance.

- Review Engagement: Responding to reviews (both positive and negative) signals to Google that your business is active and values customer feedback.

2 – How to Leverage Reviews for SEO

- Encourage satisfied customers to leave reviews on Google, Yelp, and industry-specific platforms.

- Respond to every review to show engagement and improve brand perception.

- Avoid fake or incentivized reviews, as Google’s algorithm detects and penalizes them.

- Embed Google reviews on your website using structured data to enhance visibility in search results.

Don’t underestimate what strong, authentic reviews can do to boost your local rankings, improve conversion rates, and build customer trust, ultimately leading to more traffic and sales.

Step 8: How to Analyze & Benchmark SEO Efforts

How to Identify Competitors and Analyze Their Strategies

Understanding your competition and their SEO strategies can help you uncover opportunities to improve your own rankings. By analyzing their strengths and weaknesses, you can refine your content, backlink strategy, and technical SEO to gain a competitive edge. This is my favorite part of the SEO audit because it makes me feel like I’m a covert spy.

1 – Identify Your Real SEO Competitors

Your biggest competitors may not always be your actual competitors, and this could be for many reasons. The primary ones are usually the size of their business versus yours, how long they’ve been around in comparison, and their product mix versus yours. Maybe one day DRVN will compete against the likes of Neil Patel or Astound Digital, but that’s not the case today because most of our marketers work full-time at major agencies and contract for DRVN out of a passion for working with small businesses. Therefore, potential clients in their ICP (Ideal Customer Profile) aren’t in mine and vice versa.

Instead, I’m a little more objective and suggest focusing on websites ranking for your target keywords. To find them:

- If you’re a marketing professional, ask your client who they think their competitors are

- I typically ask for 1 or 2 competitors about their size, 2 that are a little larger, and an aspirational competitor

- Search your primary keywords in Google and note the top-ranking sites

- Next use SEO tools (like Ahrefs) to find additional websites competing for the same organic traffic

- Also, look at Google’s “People also search for” suggestions for related competitors

- Lastly, refine your list down to about 3-5 competitors, including ones about your size, a little larger, and way larger than your business, as this gives you benchmarks at multiple levels.

2 – Analyze Their SEO Strategies

Once you’ve identified your competitors, analyze their approach across these areas:

- Keyword Strategy: Use Ahrefs’ Site Explorer (or a similar tool) to see what keywords they rank for that you don’t

- Content Strategy: Identify their best-performing blog posts, landing pages, and FAQs to find content gaps you can fill

- Backlink Profile: Use Ahrefs’ Site Explorer (or a similar tool) to see where they’re getting backlinks and find potential link-building opportunities

- Technical SEO: Check their site speed, structured data, mobile-friendliness, and site architecture using Google’s PageSpeed Insights and Screaming Frog. YES, I crawl my competitors’ sites to see what pages they have, and you should too.

3 – Find Opportunities to Outrank Them

- Create better, more comprehensive content using the Skyscraper Method

- Build high-quality backlinks from authoritative sites that link to your competitors

- Optimize for long-tail keywords and emerging trends they may be missing

- Improve user experience (UX) and technical SEO to boost rankings

- Also, just because the keyword ranking difficulty is high doesn’t mean you should ignore it. And just because the ranking difficulty is low doesn’t mean that’s all you should focus on. “Now have a brain” and remember content is for your user, so let that influence part of the decision making process.

The internet is at the point of saturation (meaning not a lot of new types of things are being searched), so monitoring your competitors increases your ability to take market share from the bigger players in your industry little by little. If you don’t do this, they’re taking it from you.

How to Compare Metrics for Benchmarking

Benchmarking your website against competitors helps you understand how your domain authority and backlink profile stack up. These insights allow you to identify gaps and refine your SEO strategy to stay competitive.

1 – Compare Domain Authority & Strength

- Ahrefs’ Domain Rating (DR) provides a general score of your site’s backlink strength versus your competitors

- While backlinking isn’t everything to competitiveness, it’s still an important factor

- Other tools will have similar comparison metrics

2 – Analyze Content Gaps

- Use your SEO tool to find sites linking to your competitors but not to your site, and note which competitor content earned those links

- Determine the effort it would take to create the missing content

- Remember, getting content to rank can sometimes be as easy as creating the missing page in your content pillar. But, sometimes it’s as difficult as having to create multiple missing pages.

- So prioritize filling the easy, “low-hanging fruit” content gaps with big impact first, and work your way back to the harder ones that don’t move the needle as far.

- If you want to do outreach, you can. I just don’t because of the earlier mentioned reasons. I simply prefer to share my posts in community groups specific to my clients’ industry because it seems to earn backlinks much faster with less effort.

- However, this is where a PR firm could help you get some backlinks from high authority sites. Just keep in mind their services generally are not free, but you could utilize platforms such as HARO (Help A Reporter Out) to see if you can land quality backlinks that way too.

3 – Track Competitor Link and Keyword Growth Trends

- Monitor how competitors gain new backlinks and identify patterns in their link-building strategies

- Monitoring how quickly competitors gain keyword rankings can reveal patterns in their content strategies

- Look for broken backlinks pointing to their sites and try to reclaim them for your own content

How to Create Actionable Tasks from Competitor Analysis

I’ve borderline talked about this already in the above subsections. But after you’re done analyzing your competitors’ SEO strategies versus yours, it’s time to convert these learnings into action.

1 – Improve Content Based on Your Content Gap Analysis

- Identify high performing competitor content and create more in-depth, valuable versions (aka Skyscraper Method)

- Target keywords your competitors rank for but you don’t, using Ahrefs’ Keyword Gap Tool (or another similar tool)

- Find underperforming competitor content and create optimized, fresh alternatives to outrank them

- The content I tend to go after is the content with lower keyword search volume

- This is because competitors tend to not monitor these pages as closely as others

- Is there content you can update? It’s much faster to update existing content than to create fresh content
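If your tool lets you export keyword lists, the gap analysis above boils down to “what do they rank for that I don’t,” optionally capped at a search volume so you land on the lower-volume terms mentioned above. Here’s a minimal sketch with made-up keywords and volumes. The function name and the 1,000 cutoff are just illustrative assumptions.

```python
def keyword_gap(
    yours: set[str], competitor: dict[str, int], max_volume: int
) -> list[tuple[str, int]]:
    """Keywords the competitor ranks for that you don't, capped at max_volume monthly searches."""
    gap = [
        (keyword, volume)
        for keyword, volume in competitor.items()
        if keyword not in yours and volume <= max_volume
    ]
    # Highest volume first, alphabetical for ties.
    return sorted(gap, key=lambda pair: (-pair[1], pair[0]))


# Hypothetical keyword exports: your rankings, and a competitor's with search volume.
your_keywords = {"espresso machine"}
competitor_keywords = {
    "espresso machine": 9000,
    "descale espresso machine": 700,
    "espresso pod recycling": 300,
    "coffee machines": 20000,
}
targets = keyword_gap(your_keywords, competitor_keywords, max_volume=1000)
print(targets)
```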

2 – Update Keyword Optimizations

- Are there keyword variants you’re missing on pages?

- Are you using the best ones in meta tags, headers, and copy?

- Have you over-optimized keywords to the point where Google considers it stuffing?

3 – Strengthen Your Backlink Profile

- If passively sharing to groups isn’t working, then you might want to consider doing email outreach

- Most of the time these emails get ignored, but here are a few hints to increase the probability of success:

- Good email subject line (get the person to open your email)

- Keep your email short, and provide them the page you think you can help them improve

- Give them the link to your page and in a sentence tell them why they should link to you instead

- If needed, write the copy they should use for them so it’s as little effort as possible

- Engage with your audience in groups, forums, etc, without trying to sell them anything. The purpose is to just establish yourself and/or your brand’s expertise. You’d be surprised at what this can do to generate search for your brand

- Utilize other channels (such as social) to share information. Again, you’re not trying to sell at every moment you get, and the objective is to boost your brand visibility.

4 – Optimize for Technical and UX Improvements

- Check site speed, Core Web Vitals, and mobile-friendliness using Google PageSpeed Insights

- Improve internal linking structure to enhance crawlability and user navigation

- Use schema markup and structured data if competitors leverage rich snippets for higher CTR

5 – Monitor & Adapt Regularly

SEO is a forever effort, and not a one-time thing. This is why I complete routine audits semi-annually, and I complete a more condensed audit (I call these “spot audits”) quarterly. It’s important for you to continually monitor your industry to keep a pulse on what’s going on between you and your competitors. By formulating strategies from data and adjusting as you go, you can close SEO gaps, outperform competitors, and gain a stronger foothold in search rankings.

Step 9: How to Align SEO with Other Digital Strategies

SEO & Content Marketing Synergy

SEO and content marketing go together like peas and carrots. The combo ensures your content drives organic traffic, engagement, and conversions. Rather than treating any of your marketing channels as separate verticals, aligning these strategies maximizes visibility and long-term growth. For instance, creating in-depth guides, downloadable digitals, blog posts, case studies, FAQs, etc., can provide real value to your audience and can also be reused across social channels, email marketing, etc. Just keep in mind that increased visibility and engagement drive more organic search.

How Paid Ads & SEO Work Together

While SEO and paid search ads are often seen as separate strategies, combining them can create a powerful digital marketing synergy that boosts visibility, traffic, and conversions. Here’s how they work together:

1 – Dominate Search Results & Increase Click-Through Rates (CTR)

- Running Google Ads for keywords you’re already ranking for in organic search helps your brand occupy more real estate in search results

- The top 3 to 5 organic positions tend to get the lion’s share of clickable search volume

- So if you appear on page 1 but below those positions – having an ad at the top increases your odds of getting a click somewhere

- From a psychological point of view, when a user doesn’t click on your ad at the top of the SERP, it still increases the odds they’ll click on your organic result if they make it to the bottom of the page. But how?

- They’ve already gained a little familiarity with your brand name, so even if they can’t remember where they saw it, they’re more apt to click your link versus one of your competitors’

- Think of it this way: we’ve all been to parties where we know 1 person, so who do you naturally talk to first?

- Appearing in both paid and organic listings increases brand credibility and boosts overall CTR

- Some clients only care about their overall CTR from Google (the combination of organic and paid). Most want to know the metrics separately

- I fall into the latter because I don’t like the idea of my clients paying for traffic we’re likely getting organically for free

- However, as Google continues to refine their SERP, pushing organic results further below the fold, you should be a little more open-minded about paid search strategies for keywords where you do appear top 3 organically. This is case-by-case though, which is why frequent SERP analysis is an important activity.

2 – Use Google Ads Data to Improve SEO Strategy

- Paid search campaigns provide valuable keyword performance insights, revealing high-converting terms to target in your SEO strategy

- Test ad copy variations to see which headlines and descriptions drive the best engagement, then apply that to title tags and descriptions for SEO

- After all, Google’s paid ads algorithm is designed to maximize ad clicks so they can make more money

- But there are no rules saying that you can’t take findings from paid strategies and apply those to SEO

3 – Retarget Users

- Not all organic visitors convert immediately, and with lots of my clients, their organic search channel acts as both a converting and assisting channel. This is where the beauty of retargeting ads helps bring back users by showing tailored ads across the Google Display Network, YouTube, and social media.

- Engaging in retargeting campaigns keeps your brand in the minds of your users and increases conversion rates over time

4 – Paid Ads Help During SEO Growth Phases

It’s no secret that it takes time for new sites to gain rankings organically. However, your site owner and/or executive team might not be so keen on that idea. This is where paid ads are important, because you can immediately get to the top of the SERP.

- Google PPC (pay per click) can deliver instant traffic. Running ads for important keywords while working on your organic growth strategies ensures you don’t miss potential customers. This is why I tend to suggest a Paid Media and SEO engagement with DRVN to prospective clients.

- PPC is also great at targeting users for new product launches/segments, and/or seasonal promotions where SEO hasn’t yet gained traction. It’s like playing Mike Tyson’s Punch Out on Nintendo – where SEO is playing all the levels to get to Tyson, and PPC is the code to get to him immediately. But in this example, your user audience is slightly intimidating.

5 – Build Targeted Landing Pages for Both SEO & Paid

- Optimized landing pages improve Quality Score in Google Ads while also benefiting organic search rankings.

- A strong conversion-focused page supports both paid and organic traffic, maximizing results.

By properly aligning SEO and paid ads, you create a well-rounded strategy that maximizes traffic, enhances conversions, and strengthens your digital marketing presence while reducing redundant effort.

Common SEO Issues Found in Audits (Cliff’s Notes)

Indexing Issues and Google Penalties

I come across indexing issues more often than Google penalties, but both can prevent your pages from appearing in search results, which significantly impacts your organic performance.

If your pages aren’t showing up in search results, check for these indexing problems:

- Blocked by robots.txt: Ensure your robots.txt file isn’t accidentally blocking important pages from being crawled (Disallow: /).

- Noindex Tags: Check your meta tags (<meta name="robots" content="noindex">) to ensure key pages aren’t excluded from indexing.

- Canonicalization Conflicts: Incorrect canonical tags may cause Google to index the wrong version of a page and ignore the one you want in search results.

- Duplicate or Thin Content: Pages with little value may not be indexed. Improve content depth and uniqueness.

- Crawl Budget Issues: Large sites should prioritize important pages using internal linking and sitemaps. Most of you reading this post probably don’t need to worry about crawl budget issues, and need to focus more on page speed and CWV.

Use Google Search Console (GSC) to diagnose indexing problems under the “Indexing” report and submit pages for reindexing. Remember, reindexing is just a suggestion, and doesn’t guarantee your page will be indexed.
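For a quick spot check outside of GSC, the noindex item from the list above can be tested with a few lines of code. This sketch only reads the robots meta tags in the HTML you give it. It does not check the X-Robots-Tag HTTP header, which can also carry a noindex, and the class and function names are made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives.update(part.strip().lower() for part in content.split(","))


def is_noindexed(html: str) -> bool:
    """True if a robots meta tag asks search engines not to index the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


# Hypothetical page heads.
blocked_page = '<head><meta name="robots" content="noindex, follow"></head>'
open_page = '<head><meta name="robots" content="index, follow"></head>'
print(is_noindexed(blocked_page), is_noindexed(open_page))
```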

Google Penalties: Algorithmic vs. Manual Actions

Google penalties can be either algorithmic (due to core updates like Panda, Penguin, or Helpful Content) or manual actions (applied by Google’s web-spam team). Most of the time when a site claims they lost all their organic traffic due to a recent Google Core update, I’ve been able to find things they were doing or did wrong, and/or they were already on a downward trajectory and the core update was a catalyst that just sped it up. But there have been some outliers to that statement.

Algorithmic Penalties

- Performance losses from Core Updates are usually triggered by poor content practices such as thin content or excessive ads, or by unnatural backlinks.

- Recover lost performance by improving content quality, removing bad backlinks (if these are flagged in GSC), and enhancing user experience. And YES – excessive popups, ads, and constantly changing page layouts lead to poor user experiences which more often than not leads to decreases in organic performance.

Manual Actions

- Go to Google Search Console > Manual Actions to see if you have any of these. 99.9% of the time it’s a result of black-hat SEO tactics such as cloaking, keyword stuffing, and unnatural link schemes. For example: I once consulted for a business that came to me because they had a sudden drop in organic traffic… their Digital Marketing Manager had bought backlinks on Fiverr – yikes!

- Fix the issues, request reconsideration, and follow Google Search Essentials (formerly the Webmaster Guidelines) to regain rankings.

Duplicate Content

Duplicate content can confuse search engines, dilute ranking signals, and prevent the right pages from appearing in search results. Most of the time when I come across this, it was a technical error of some sort. But there have been times when I’ve caught a client’s content team reposting old content just to give the appearance of completing work. And I’m talking carbon copies, which isn’t an honest mistake.

Commonly Found Duplicate Content Issues

- Multiple URL variations (e.g., example.com/page, example.com/page/, www.example.com/page)

- HTTP vs. HTTPS versions and/or www vs. non-www inconsistencies

- Query Strings, session IDs, tracking parameters, and pagination issues

- RSS Feed Content, plagiarized/copied content across multiple pages or syndication without proper attribution

Use Screaming Frog, Ahrefs (or similar SEO tool), or Google Search Console to find duplicate pages.
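If you have a list of crawled URLs exported from one of those tools, the URL-variation issues above can be grouped with a short script. This is a rough Python sketch, not how any of those tools work internally. It assumes HTTPS and non-www is your preferred form, and the `IGNORED_PARAMS` set is an illustrative list of tracking and session parameters that you would adjust for your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking/session parameters; adjust this list for your own site.
IGNORED_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
    "gclid", "fbclid", "sessionid",
}


def normalize(url: str) -> str:
    """Collapse scheme, www, trailing-slash, and tracking-parameter variations."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def find_duplicate_groups(urls):
    """Return {normalized URL: [original URLs]} for groups with 2+ members."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


if __name__ == "__main__":
    crawled = [
        "http://example.com/page",
        "https://www.example.com/page/",
        "https://example.com/page?utm_source=newsletter",
        "https://example.com/other",
    ]
    for normalized, members in find_duplicate_groups(crawled).items():
        print(normalized, members)
```

Pagination parameters such as ?page=2 are deliberately kept, since those pages usually hold different content. Each group this prints is a candidate for a canonical tag or a 301 redirect, not proof of a problem.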

Fix Duplicate / Similar Content with Canonicalization

A canonical tag (<link rel="canonical" href="URL">) tells search engines which version of a page is the preferred one to index. Remember, this is a suggestion, and not a directive – Google does NOT have to follow it.

- Every page should have a canonical tag, even if it’s a self-referencing one because it’ll reinforce its original version

- For similar content variations (e.g., product pages with different colors), canonicalize to the primary version
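To check the two points above on a page-by-page basis, here is a small Python sketch that reads the canonical tag out of a page’s HTML and reports whether it is missing, self-referencing, pointing elsewhere, or conflicting. The `canonical_status` function and its four labels are made up for this example, and the comparison is a plain string match after resolving relative hrefs, so treat it as a starting point only.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"].strip())


def canonical_status(page_url: str, html: str) -> str:
    """Classify a page's canonical tag relative to its own URL."""
    parser = _CanonicalParser()
    parser.feed(html)
    # Resolve relative hrefs against the page URL before comparing.
    resolved = [urljoin(page_url, href) for href in parser.canonicals]
    if not resolved:
        return "missing"
    if len(set(resolved)) > 1:
        return "conflicting"
    return "self" if resolved[0] == page_url else "points elsewhere"


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="https://example.com/shirt"></head>'
    print(canonical_status("https://example.com/shirt", html))
    print(canonical_status("https://example.com/shirt?color=red", html))
```

A color variant that reports "points elsewhere" is behaving as intended, provided the target is the primary product page. The results worth a closer look are "missing" and "conflicting".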

Use 301 Redirects When Necessary

- Redirect duplicate URLs to the main version using 301 redirects to consolidate ranking signals

- Ensure redirect logic is built in so the HTTP and HTTPS versions, and the www and apex (non-www) versions, of each URL all redirect to a single preferred version to avoid split indexing
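The redirect logic itself usually lives in your server or CDN configuration, but the decision it has to make is simple enough to sketch in Python. The example below assumes HTTPS on the apex domain is the preferred version and uses example.com as a placeholder; `PREFERRED_HOST`, `ALTERNATE_HOSTS`, and `redirect_target` are hypothetical names for this illustration.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumptions for this sketch: HTTPS on the apex domain is the preferred version.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "example.com"
ALTERNATE_HOSTS = {"www.example.com"}


def redirect_target(url: str):
    """Return the URL a request should 301 to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host != PREFERRED_HOST and host not in ALTERNATE_HOSTS:
        return None  # Not one of our hosts, so leave it alone.
    if parts.scheme == PREFERRED_SCHEME and host == PREFERRED_HOST:
        return None  # Already the preferred version.
    # Fix scheme and host in one step so the visitor gets a single redirect.
    return urlunsplit(
        (PREFERRED_SCHEME, PREFERRED_HOST, parts.path or "/", parts.query, "")
    )


if __name__ == "__main__":
    for candidate in (
        "http://www.example.com/page?x=1",
        "http://example.com/page",
        "https://example.com/page",
    ):
        print(candidate, "->", redirect_target(candidate))
```

Correcting the scheme and the host in the same step matters because it avoids redirect chains such as HTTP www to HTTPS www to HTTPS apex.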

Optimize Internal Linking & Sitemaps

- Use consistent internal linking to point to the original version of a page

- Update your XML sitemap to only include canonical URLs

- Submit your XML sitemap in Google Search Console – you’d be surprised how often I find no sitemap submitted in GSC. Also, you’d be surprised at how often I find GSC isn’t even set up when speaking to a prospective client
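Most CMS platforms and SEO plugins generate the sitemap for you, but if you are building one from a crawl export, the “canonical URLs only” rule can be sketched in a few lines of Python. This example assumes your crawl data is a list of (URL, canonical URL) pairs; `build_sitemap` is a hypothetical helper, and it writes only the required loc element for each URL.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap XML from (url, canonical_url) pairs, keeping canonical URLs only."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url, canonical in pages:
        # Skip pages that canonicalize elsewhere, and skip repeats.
        if url != canonical or url in seen:
            continue
        seen.add(url)
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    crawl = [
        ("https://example.com/", "https://example.com/"),
        ("https://example.com/page?utm_source=x", "https://example.com/page"),
        ("https://example.com/page", "https://example.com/page"),
    ]
    print(build_sitemap(crawl))
```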

I’ve Read This, Now What?

An SEO audit is just the first step, and it’s a really, really long one (almost as long as this guide). Now you need to turn your findings into action, because that’s what drives success. Prioritizing fixes, using the right tools, and maintaining regular audits will help keep your website optimized and competitive in search rankings.

Prioritizing Fixes & Creating an Action Plan

After completing your audit, categorize issues based on their impact on rankings and user experience – this essentially becomes your task list.

- High Priority Items: Critical issues such as indexing problems, slow page speed in comparison to your competitors, broken links (internal, external, and backlinks), poor user experience, or other major technical errors

- Moderate Priority Items: Content updates, content gaps, metadata optimization, internal linking improvements, and structured data fixes

- Low Priority Items: Minor aesthetic-only design enhancements (unless it significantly improves the UX, it’s probably minor), additional schema markup, and fine-tuning content structure
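If your findings live in a spreadsheet or crawl export, the three tiers above can be turned into a sorted task list with a few lines of Python. This is only a sketch: the category labels in `CATEGORY_TIER` are made-up shorthand for the items listed above, and using the number of affected pages as a tie-breaker is my assumption for the example, not a rule.

```python
from dataclasses import dataclass

TIER_ORDER = {"high": 0, "moderate": 1, "low": 2}

# Hypothetical category labels mapped to the three tiers described above.
CATEGORY_TIER = {
    "indexing": "high",
    "page_speed": "high",
    "broken_links": "high",
    "poor_ux": "high",
    "content_update": "moderate",
    "content_gap": "moderate",
    "metadata": "moderate",
    "internal_linking": "moderate",
    "structured_data_fix": "moderate",
    "design_polish": "low",
    "additional_schema": "low",
    "content_structure": "low",
}


@dataclass
class Finding:
    category: str
    description: str
    pages_affected: int = 1


def build_task_list(findings):
    """Sort findings by tier, then by pages affected (largest first)."""
    def sort_key(finding):
        # Unknown categories default to moderate so they are not buried.
        tier = CATEGORY_TIER.get(finding.category, "moderate")
        return (TIER_ORDER[tier], -finding.pages_affected)

    return sorted(findings, key=sort_key)


if __name__ == "__main__":
    audit = [
        Finding("metadata", "Missing title tags", 40),
        Finding("indexing", "Noindex on product pages", 12),
        Finding("design_polish", "Inconsistent button color", 3),
        Finding("broken_links", "Internal links returning 404", 90),
    ]
    for task in build_task_list(audit):
        print(CATEGORY_TIER.get(task.category, "moderate"), "-", task.description)
```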

I like to break down tasks into a phased, step-by-step action plan so it gives me milestone dates and goals. Will it be perfect? No, and that’s where you need to have the ability to adapt. But charting these items out on a timeline helps you see a path towards your goals.

Do You Need an SEO Tool?

It’s really, really hard to do SEO without an SEO tool, but I get it, not everyone has the budget for tools. There are free tools, and creative ways to research things – but you do hit a ceiling quickly. My suggestion is Ahrefs, and they have plenty of pricing tiers. If BrightEdge had a small agency plan even for access to just DataCube, I would subscribe to that. Unfortunately, they don’t.

Do You Really Need to Complete an SEO Audit Regularly?

If the performance of your site doesn’t matter to you, then no, you don’t. As crazy as it may sound, I know of people who use their website just as additional proof of who they are, and they like to get all their business offline (yes, those people still exist). However, for most of you, a full SEO audit should be done semi-annually, with a quarterly spot audit in between.

What should be included in those spot audits? Unfortunately, I can’t give you an exact answer because it depends on the site, and the maturity of the SEO program. But regularly auditing your site ensures you stay up to date with Google’s Core Algorithm Updates, catch technical or content issues before they impact your rankings, and keep your site relevant. It’s kinda like “maintaining a car” – do you have to do it? No, but if you want your site to keep performing and get better over time then it’s a necessity.

I hope my SEO audit checklist helps you better understand what I audit, and why. If there’s anything I, or my agency, DRVN Media, can do to help you, please don’t hesitate to reach out to us, even if it’s just to ask questions about SEO. If you’re looking to hire an SEO Agency to manage your organic efforts, please check out our SEO Services page.

Other Articles You Might Be Interested In:

- SEO Strategies: 2025 Guide to Setting SEO Strategies

- SEO Audits: Important Elements of an SEO Audit (This one is more about the importance of SEO audits)

- Content Quality: Quality is Always More Important than Quantity

- Crawling a Site for the First Time: How to Setup Screaming Frog

About the Author:

Vinh Huynh is a Digital Marketing strategist specializing in SEO & Paid Search, and the owner of DRVN. He helps businesses improve their online visibility through data-driven strategies. Outside of Digital Marketing, you can find him coaching the sport of Olympic Weightlifting and networking with entrepreneurs and local businesses.